4. Query Language Guide

4.1. Preface

4.1.1. Overview

This guide provides information about how to use the rasdaman database management system (in short: rasdaman). The document explains usage of the rasdaman Query Language.

Follow the instructions in this guide as you develop your application which makes use of rasdaman services. Explanations detail how to create type definitions and instances; how to retrieve information from databases; how to insert, manipulate, and delete data within databases.

4.1.2. Audience

The information in this manual is intended primarily for application developers; additionally, it can be useful for advanced users of rasdaman applications and for database administrators.

4.1.3. Rasdaman Documentation Set

This manual should be read in conjunction with the complete rasdaman documentation set which this guide is part of. The documentation set in its completeness covers all important information needed to work with the rasdaman system, such as programming and query access to databases, guidance to utilities such as raswct, release notes, and additional information on the rasdaman wiki.

4.2. Introduction

4.2.1. Multidimensional Data

In principle, any natural phenomenon becomes spatio-temporal array data of some specific dimensionality once it is sampled and quantised for storage and manipulation in a computer system; additionally, a variety of artificial sources such as simulators, image renderers, and data warehouse population tools generate array data. The common characteristic they all share is that a large set of large multidimensional arrays has to be maintained. We call such arrays multidimensional discrete data (or short: MDD) expressing the variety of dimensions and separating them from the conceptually different multidimensional vectorial data appearing in geo databases.

rasdaman is a domain-independent database management system (DBMS) which supports multidimensional arrays of any size and dimension and over freely definable cell types. Versatile interfaces allow rapid application deployment while a set of cutting-edge intelligent optimization techniques in the rasdaman server ensures fast, efficient access to large data sets, particularly in networked environments.

4.2.2. Rasdaman Overall Architecture

The rasdaman client/server DBMS has been designed using internationally approved standards wherever possible. The system follows a two-tier client/server architecture with query processing completely done in the server. Internally and invisible to the application, arrays are decomposed into smaller units which are maintained in a conventional DBMS, for our purposes called the base DBMS.

On the other hand, the base DBMS usually will hold alphanumeric data (such as metadata) besides the array data. Rasdaman offers means to establish references between arrays and alphanumeric data in both directions.

Hence, all multidimensional data go into the same physical database as the alphanumeric data, thereby considerably easing database maintenance (consistency, backup, etc.).

Figure 4.1 Embedding of rasdaman in IT infrastructure

Further information on application program interfacing, administration, and related topics is available in the other components of the rasdaman documentation set.

4.2.3. Interfaces

The syntactical elements explained in this document comprise the rasql language interface to rasdaman. There are several ways to actually enter such statements into the rasdaman system:

By using the rasql command-line tool to send queries to rasdaman and get back the results.

By developing an application program which uses the raslib/rasj function

oql_execute()to forward query strings to the rasdaman server and get back the results.

Developing applications using the client API is the subject of this document. Please refer to the C++ Developers Guide or Java Developers Guide of the rasdaman documentation set for further information.

4.2.4. rasql and Standard SQL

The declarative interface to the rasdaman system consists of the rasdaman Query Language, rasql, which supports retrieval, manipulation, and data definition.

Moreover, the rasdaman query language, rasql, is very similar - and in fact embeds into - standard SQL. With only slight adaptations, rasql has been standardized by ISO as 9075 SQL Part 15: MDA (Multi-Dimensional Arrays). Hence, if you are familiar with SQL, you will quickly be able to use rasql. Otherwise you may want to consult the introductory literature referenced at the end of this chapter.

4.2.5. Notational Conventions

The following notational conventions are used in this manual:

Program text (under this we also subsume queries in the document on hand) is

printed in a monotype font. Such text is further differentiated into

keywords and syntactic variables. Keywords like struct are printed in boldface;

they have to be typed in as is.

An optional clause is enclosed in brackets; an arbitrary repetition is indicated

through brackets and an asterisk. Grammar alternatives can be grouped in

parentheses separated by a | symbol.

Example

selectresultListfromcollName[ [ as ]collAlias] [ ,collName[ [ as ]collAlias] ]* [ wherebooleanExp]

It is important not to mix the regular brackets [ and ] denoting array

access, trimming, etc., with the grammar brackets [ and ] denoting

optional clauses and repetition; in grammar excerpts the first case is in

double quotes. The same applies to parentheses.

Italics are used in the text to draw attention to the first instance of a defined term in the text. In this case, the font is the same as in the running text, not Courier as in code pieces.

4.3. Terminology

4.3.1. An Intuitive Definition

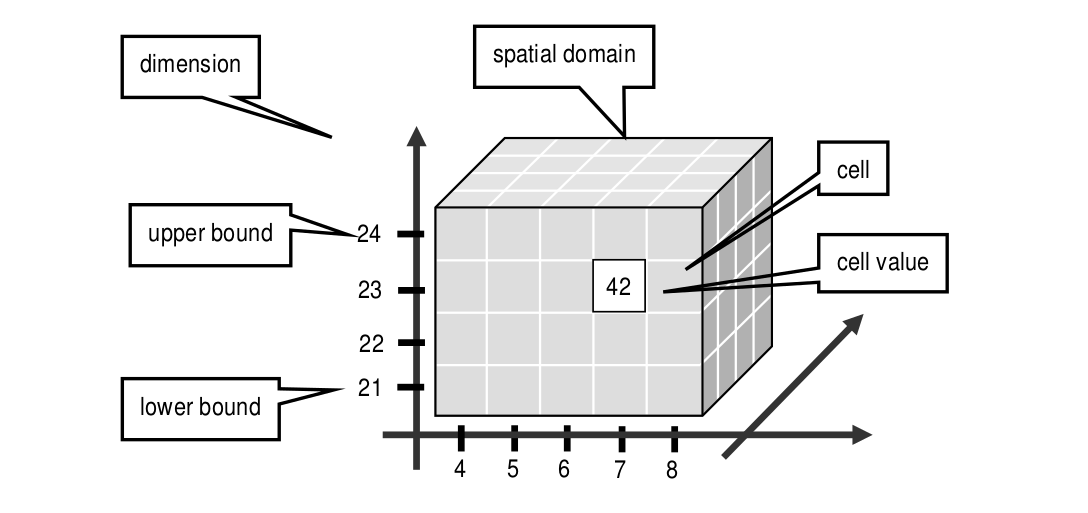

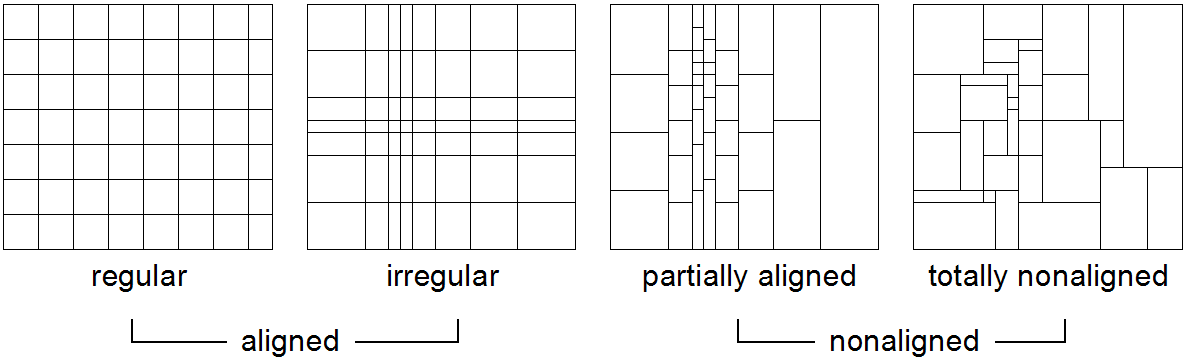

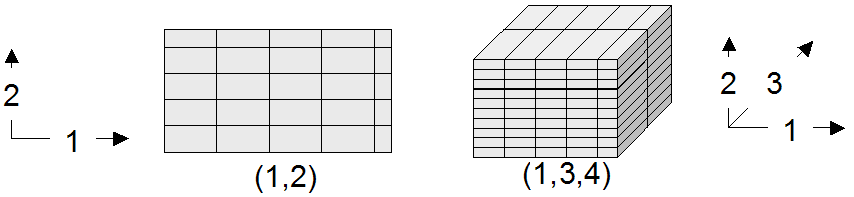

An array is a set of elements which are ordered in space. The space considered here is discretized, i.e., only integer coordinates are admitted. The number of integers needed to identify a particular position in this space is called the dimension (sometimes also referred to as dimensionality). Each array element, which is referred to as cell, is positioned in space through its coordinates.

A cell can contain a single value (such as an intensity value in case of grayscale images) or a composite value (such as integer triples for the red, green, and blue component of a color image). All cells share the same structure which is referred to as the array cell type or array base type.

Implicitly a neighborhood is defined among cells through their coordinates: incrementing or decrementing any component of a coordinate will lead to another point in space. However, not all points of this (infinite) space will actually house a cell. For each dimension, there is a lower and upper bound, and only within these limits array cells are allowed; we call this area the spatial domain of an array. In the end, arrays look like multidimensional rectangles with limits parallel to the coordinate axes. The database developer defines both spatial domain and cell type in the array type definition. Not all bounds have to be fixed during type definition time, though: It is possible to leave bounds open so that the array can dynamically grow and shrink over its lifetime.

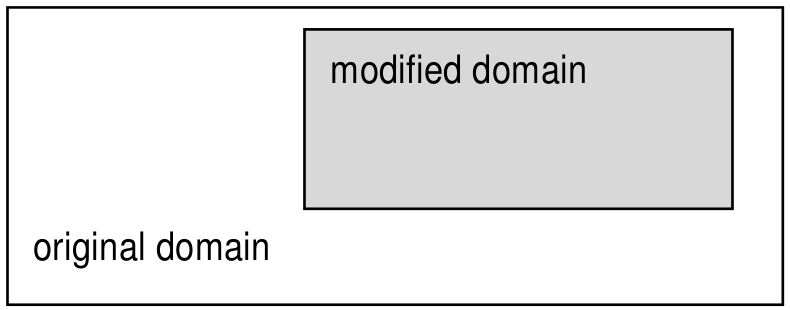

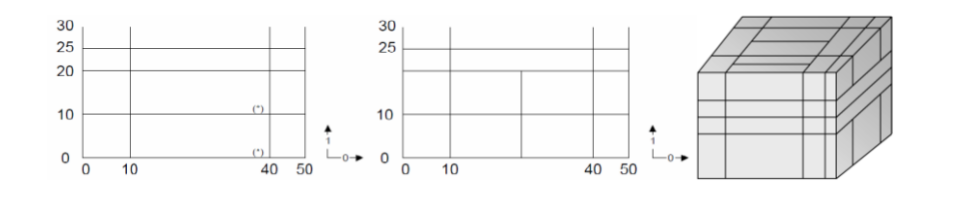

Figure 4.2 Constituents of an array

Synonyms for the term array are multidimensional array / MDA, multidimensional data / MDD, raster data, gridded data. They are used interchangeably in the rasdaman documentation.

In rasdaman databases, arrays are grouped into collections. All elements of a collection share the same array type definition (for the remaining degrees of freedom see Array types). Collections form the basis for array handling, just as tables do in relational database technology.

4.3.2. A Technical Definition

Programmers who are familiar with the concept of arrays in programming languages maybe prefer this more technical definition:

An array is a mapping from integer coordinates, the spatial domain, to some data type, the cell type. An array’s spatial domain, which is always finite, is described by a pair of lower bounds and upper bounds for each dimension, resp. Arrays, therefore, always cover a finite, axis-parallel subset of Euclidean space.

Cell types can be any of the base types and composite types defined in the ODMG standard and known, for example from C/C++. In fact, most admissible C/C++ types are admissible in the rasdaman type system, too.

In rasdaman, arrays are strictly typed wrt. spatial domain and cell type. Type checking is done at query evaluation time. Type checking can be disabled selectively for an arbitrary number of lower and upper bounds of an array, thereby allowing for arrays whose spatial domains vary over the array lifetime.

4.4. Sample Database

4.4.1. Collection mr

This section introduces sample collections used later in this manual. The sample database which is shipped together with the system contains the schema and the instances outlined in the sequel.

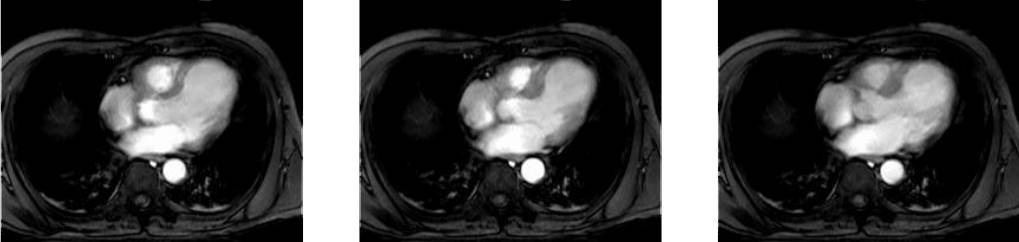

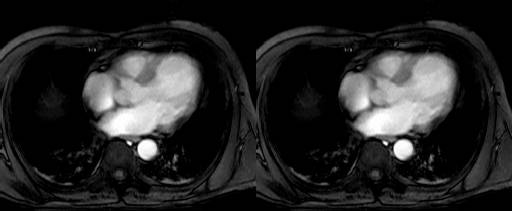

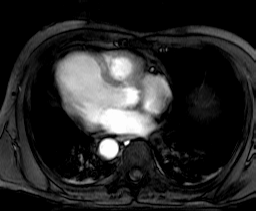

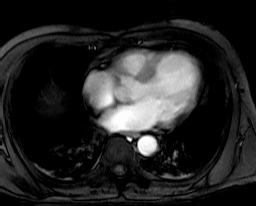

Collection mr consists of three images (see Figure 4.3) taken from the

same patient using magnetic resonance tomography. Images are 8 bit

grayscale with pixel values between 0 and 255 and a size of 256x211.

Figure 4.3 Sample collection mr

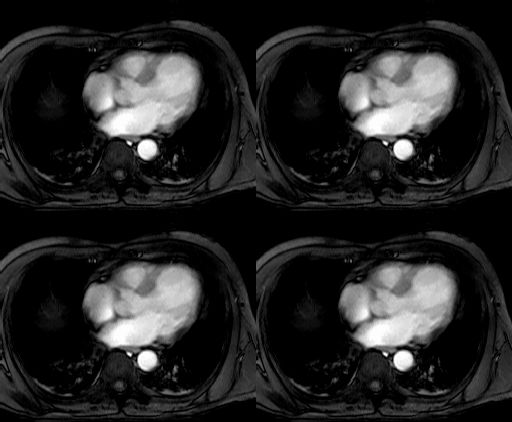

4.4.2. Collection mr2

Collection mr2 consists of only one image, namely the first image of

collection mr (Figure 4.4). Hence, it is also 8 bit grayscale with

size 256x211.

Figure 4.4 Sample collection mr2

4.4.3. Collection rgb

The last example collection, rgb, contains one item, a picture of the

anthur flower (Figure 4.5). It is an RGB image of size 400x344 where

each pixel is composed of three 8 bit integer components for the red, green, and

blue component, resp.

Figure 4.5 The collection rgb

4.5. Type Definition Using rasql

4.5.1. Overview

Every instance within a database is described by its data type (i.e., there is exactly one data type to which an instance belongs; conversely, one data type can serve to describe an arbitrary number of instances). Each database contains a self-contained set of such type definitions; no other type information, external to a database, is needed for database access.

Types in rasdaman establish a 3-level hierarchy:

Cell types can be atomic base types (such as char or float) or composite (“struct”) types such as red / green / blue color pixels.

Array types define arrays over some atomic or struct cell type and a spatial domain.

Set types describe sets of arrays of some particular array type.

Types are identified by their name which must be unique within a database and not exceed length of 200 characters. Like any other identifier in rasql queries, type names are case-sensitive, consist of only letters, digits, or underscore, and must start with a letter.

4.5.2. Cell types

4.5.2.1. Atomic types

The set of standard atomic types, which is generated during creation of a database, materializes the base types defined in the ODMG standard (cf. Table 4.1).

type name |

size |

description |

|---|---|---|

|

1 bit 2 |

true (nonzero value), false (zero value) |

|

8 bit |

signed integer |

|

8 bit |

unsigned integer |

|

16 bit |

signed integer |

|

16 bit |

unsigned integer |

|

32 bit |

signed integer |

|

32 bit |

unsigned integer |

|

32 bit |

single precision floating point |

|

64 bit |

double precision floating point |

|

32 bit |

complex of 16 bit signed integers |

|

64 bit |

complex of 32 bit signed integers |

|

64 bit |

single precision floating point complex |

|

128 bit |

double precision floating point complex |

4.5.2.2. Composite types

More complex, composite cell types can be defined arbitrarily, based on the system-defined atomic types. The syntax is as follows:

create type typeName

as (

attrName_1 atomicType_1,

...

attrName_n atomicType_n

)

Attribute names must be unique within a composite type, otherwise an

exception is thrown. No other type with the name typeName may pre-exist

already.

4.5.2.3. Example

An RGB pixel type can be defined as

create type RGBPixel

as (

red char,

green char,

blue char

)

4.5.3. Array types

An marray (“multidimensional array”) type defines an array type through its cell type (see Cell types) and a spatial domain.

4.5.3.1. Syntax

The syntax for creating an marray type is as below. There are two variants, corresponding to the dimensionality specification alternatives described above:

create type typeName

as baseTypeName mdarray namedMintervalExp

where baseTypeName is the name of a defined cell type (atomic or composite)

and namedMintervalExp is a multidimensional interval specification as described

in the following section.

Alternatively, a composite cell type can be indicated in-place:

create type typeName

as (

attrName_1 atomicType_1,

...

attrName_n atomicType_n

) mdarray namedMintervalExp

No type (of any kind) with name typeName may pre-exist already,

otherwise an exception is thrown.

Attribute names must be unique within a composite type, otherwise an exception is thrown.

4.5.3.2. Spatial domain

Dimensions and their extents are specified by providing an axis name for each dimension and, optionally, a lower and upper bound:

[ a_1 ( lo_1 : hi_1 ), ... , a_d ( lo_d : hi_d ) ]

[ a_1 , ... , a_d ]

where d is a positive integer number, a_i are identifiers, and lo_1

and hi_1 are integers such that lo_1 \(\le\) hi_1. Both lo_1

and hi_1 can be an asterisk (*) instead of a number, in which case no

limit in the particular direction of the axis will be enforced. If the bounds

lo_1 and hi_1 on a particular axis are not specified, they are assumed

to be equivalent to *.

Axis names must be unique within a domain specification, otherwise an exception is thrown.

Currently axis names are ignored and cannot be used in queries yet.

4.5.3.3. Examples

The following statement defines a 2-D RGB image, based on the definition of

RGBPixel as shown above:

create type RGBImage

as RGBPixel mdarray [ x ( 0:1023 ), y ( 0:767 ) ]

An 2-D image without any extent limitation can be defined through:

create type UnboundedImage

as RGBPixel mdarray [ x, y ]

which is equivalent to

create type UnboundedImage

as RGBPixel mdarray [ x ( *:* ), y ( *:* ) ]

Selectively we can also limit only the bounds on the x axis for example:

create type PartiallyBoundedImage

as RGBPixel mdarray [ x ( 0 : 1023 ), y ]

4.5.4. Set types

A set type defines a collection of arrays sharing the same marray type. Additionally, it can indicate that some cell values in these arrays are null. Null is a special marker commonly used in databases to indicate non-existing data values. In rasdaman, it means that in some array cell there is no value that can be used for anything. A variety of reasons exist for such missing values: a sensor detected an erroneous reading, no value was provided at all (maybe due to transmission problems), no values are applicable to this position at all (such as sea surface temperature measurements on dry land positions), etc. As arrays by definition have a value for every position inside the array spatial domain, a “placeholder” must exist which reserves the place (thereby not disturbing array order) while indicating that the value is not to be used. We call such cells null values.

In rasdaman two ways exist to model null values:

by keeping a flag (called “null flag” or “null mask”) for each cell indicating whether it is null or not; this is the common approach in databases;

by designating one or more of the possible values of a cell as representing a null value; we call the set of these possible values the “null set”; this is the common way of representing nulls in sensor data acquisition.

Starting with version 10.0 both methods are supported by rasdaman in a compatible manner. The null set is important for compatibility with sensor data encoded in various formats, while the null mask is crucial for producing accurate results during query processing.

This section explains how to specify null values in the array set definition. Dynamically setting null set and mask in an expression and null behavior in operations is explained in Null Values.

4.5.4.1. Syntax

createSetType:

create type setTypeName

as set '(' marrayTypeName (nullClause)? ')'

nullClause:

null values nullSet

| null values { nullSet, nullSet* }

nullSet: [ nullSetMember, nullSetMember* ]

nullSetMember:

number : number

| "*" : number

| number : "*"

| number

where marrayTypeName is the name of a defined marray type and nullClause

is an optional specification of a set of values to be treated as nulls.

No type with the name setTypeName may pre-exist already.

4.5.4.2. Semantics

The optional nullClause allows associating N null sets with the created set

type. Let B be the base type of marrayTypeName. The number of null sets N

can be

1, in which case the single null set applies to each band of B

K, where K is the number of bands defined by the composite type B; in this case the K null sets are enclosed in braces

Any other case results in an error.

A cell value of an array band is a null value if it matches any of the

nullSetMember in the null set defined by the nullClause. Each

nullSetMember expression is matched as follows depending on the syntax used:

lo:hi- any value between and inclusive lo and hi is a null value; lo and hi are numbers such that lo ≤ hi, and conform to the base type defined by the set type;*:hi- any value lower than or equal to hi is a null value;lo:*- any value greater than or equal to lo is a null value;x- any value equal to x is a null value. For floating-point data it is recommended to always specify sufficiently large intervals to include neighboring numbers generated through rounding errors, instead of single numbers with this variant.For floating-point base type, x can additionally be set to NaN with

nanfor base type double, andnanffor base type float.

All lo, hi, and x in the null set must be within the range of values supported

by the base type of marrayTypeName, e.g. 0 to 255 for base type char;

otherwise a type error will be thrown.

4.5.4.3. Examples

The following statement defines a set type of 2-D RGB images, based on the

definition of RGBImage:

create type RGBSet

as set ( RGBImage )

If values 0, 253, 254, and 255 are to be considered null values, this can be specified as follows:

create type RGBSet

as set ( RGBImage null values [ 0, 253 : 255 ] )

Note that these null values will apply equally to every band.

As the cell type in this case is char (possible values between 0 and 255), the type can be equivalently specified like this:

create type RGBSet

as set ( RGBImage null values [ 0, 253 : * ] )

In the first band values equal to 0 are null values, while in the second and third bands null values are those equal to 255:

create type RGBSetSeparateNullValues

as set ( RGBImage null values { [0], [255], [255] } )

With the set type below, values which are nan are null values (nanf is the float constant, while nan is the double constant):

create type FloatSetNanNullValue

as set ( FloatImage null values [nanf] )

create type DoubleSetNanNullValue

as set ( DoubleImage null values [nan] )

4.5.5. Drop type

A type definition can be dropped (i.e., deleted from the database) if it is not in use. This is the case if both of the following conditions hold:

The type is not used in any other type definition.

There are no array instances existing which are based, directly or indirectly, on the type on hand.

Further, atomic base types (such as char) cannot be deleted.

Drop type syntax

drop type typeName

4.5.6. List available types

A list of all types defined in the database can be obtained in textual

form, adhering to the rasql type definition syntax. This is done by

querying virtual collections (similar to the virtual collection

RAS_collNameS).

Technically, the output of such a query is a list of 1-D char arrays,

each one containing one type definition.

4.5.6.1. Syntax

select typeColl from typeColl

where typeColl is one of

RAS_STRUCT_TYPESfor struct typesRAS_MARRAY_TYPESfor array typesRAS_SET_TYPESfor set typesRAS_TYPESfor union of all types

Note

Collection aliases can be used, such as:

select t from RAS_STRUCT_TYPES as t

No operations can be performed on the output array.

4.5.6.2. Example output

A struct types result may look like this when printed:

create type RGBPixel

as ( red char, green char, blue char )

create type TestPixel

as ( band1 char, band2 char, band3 char )

create type GeostatPredictionPixel

as ( prediction float, variance float )

An marray types result may look like this when printed:

create type GreyImage

as char mdarray [ x, y ]

create type RGBCube

as RGBPixel mdarray [ x, y, z ]

create type XGAImage

as RGBPixel mdarray [ x ( 0 : 1023 ), y ( 0 : 767 ) ]

A set types result may look like this when printed:

create type GreySet

as set ( GreyImage )

create type NullValueTestSet

as set ( NullValueArrayTest null values [5:7] )

An all types result will print combination of all struct types, marray types, and set types results.

4.5.7. Changing types

The type of an existing collection can be changed to another type through

the alter statement.

The new collection type must be compatible with the old one, which means:

same cell type

same dimensionality

no domain shrinking

Changes are allowed, for example, in the null values.

Alter type syntax

alter collection collName

set type collType

where

collName is the name of an existing collection

collType is the name of an existing collection type

Usage notes

The collection does not need to be empty, i.e. it may contain array objects.

Currently, only set (i.e., collection) types can be modified.

Example

Update the set type of a collection Bathymetry to a new set type that

specifies null values:

alter collection Bathymetry

set type BathymetryWithNullValues

4.6. Query Execution with rasql

The rasdaman toolkit offers essentially a couple of ways to communicate with a database through queries:

By submitting queries via command line using rasql; this tool is covered in this section.

By writing a C++, Java, or Python application that uses the rasdaman APIs (raslib, rasj, or rasdapy3 respectively). See the rasdaman API guides for further details.

The rasql tool accepts a query string (which can be parametrised as explained in the API guides), sends it to the server for evaluation, and receives the result set. Results can be displayed in alphanumeric mode, or they can be stored in files.

4.6.1. Examples

For the user who is familiar with command line tools in general and the rasql query language, we give a brief introduction by way of examples. They outline the basic principles through common tasks.

Create a collection

testof typeGreySet(note the explicit setting of userrasadmin; rasql’s default userrasguestby default cannot write):rasql -q "create collection test GreySet" \ --user rasadmin --passwd rasadmin

Print the names of all existing collections:

rasql -q "select r from RAS_collNameS as r" \ --out string

Export demo collection

mrinto TIFF files rasql_1.tif, rasql_2.tif, rasql_3.tif (note the escaped double-quotes as required by shell):rasql -q "select encode(m, \"tiff\") from mr as m" --out file

Import TIFF file myfile into collection

mras new image (note the different query string delimiters to preserve the$character!):rasql -q 'insert into mr values decode($1)' \ -f myfile --user rasadmin --passwd rasadmin

Put a grey square into every mr image:

rasql -q "update mr as m set m[0:10,0:10] \ assign marray x in [0:10,0:10] values 127c" \ --user rasadmin --passwd rasadmin

Verify result of update query by displaying pixel values as hex numbers:

rasql -q "select m[0:10,0:10] from mr as m" --out hex

4.6.2. Invocation syntax

Rasql is invoked as a command with the query string as parameter. Additional parameters guide detailed behavior, such as authentication and result display.

Any errors or other diagnostic output encountered are printed; transactions are aborted upon errors.

Usage:

rasql [--query q|-q q] [options]

Options:

- -h, --help

show command line switches

- -q, --query q

query string to be sent to the rasdaman server for execution

- -f, --file f

file name for upload through $i parameters within queries; each $i needs its own file parameter, in proper sequence 4. Requires –mdddomain and –mddtype

- --content

display result, if any (see also –out and –type for output formatting)

- --out t

use display method t for cell values of result MDDs where t is one of

none: do not display result item contents

file: write each result MDD into a separate file

string: print result MDD contents as char string (only for 1D arrays of type char)

hex: print result MDD cells as a sequence of space-separated hex values

formatted: reserved, not yet supported

Option –out implies –content; default: none

- --outfile of

file name template for storing result images (ignored for scalar results). Use ‘%d’ to indicate auto numbering position, like with printf(1). For well-known file types, a proper suffix is appended to the resulting file name. Implies –out file. (default: rasql_%d)

- --mdddomain d

MDD domain, format: ‘[x0:x1,y0:y1]’; required only if –file specified and file is in data format r_Array; if input file format is some standard data exchange format and the query uses a convertor, such as encode($1,”tiff”), then domain information can be obtained from the file header.

- --mddtype t

input MDD type (must be a type defined in the database); required only if –file specified and file is in data format r_Array; if input file format is some standard data exchange format and the query uses a convertor, such as decode($1,”tiff”), then type information can be obtained from the file header.

- --type

display type information for results

- -s, --server h

rasdaman server name or address (default: localhost)

- -p, --port p

rasdaman port number (default: 7001)

- -d, --database db

name of database (default: RASBASE)

- --user u

name of user (default: rasguest)

- --passwd p

password of user (default: rasguest). If this option is not specified, rasql will try to find a matching password in file

~/.raspassif it exists. This text file should containusername:passwordpairs, each one on a separate line, and it must have permissions u=rw (0600) or less (that is, at most read/writeable for the owner system user). If:characters appear in the password or username, they must be escaped with a backward slash\; e.g.username:pass\:wordwill be interpreted asusernamewith passwordpass:word. In case of multiple lines with matching username, rasql will pick the first one.- --quiet

print no ornament messages, only results and errors

4.7. Overview: General Query Format

4.7.1. Basic Query Mechanism

rasql provides declarative query functionality on collections (i.e., sets) of MDD stored in a rasdaman database. The query language is based on the SQL-92 standard and extends the language with high-level multidimensional operators.

The general query structure is best explained by means of an example. Consider the following query:

select mr[100:150,40:80] / 2

from mr

where some_cells( mr[120:160, 55:75] > 250 )

In the from clause, mr is specified as the working collection on which all evaluation will take place. This name, which serves as an “iterator variable” over this collection, can be used in other parts of the query for referencing the particular collection element under inspection.

Optionally, an alias name can be given to the collection (see syntax below) - however, in most cases this is not necessary.

In the where clause, a condition is phrased. Each collection element in turn is probed, and upon fulfillment of the condition the item is added to the query result set. In the example query, part of the image is tested against a threshold value.

Elements in the query result set, finally, can be “post-processed” in the select clause by applying further operations. In the case on hand, a spatial extraction is done combined with an intensity reduction on the extracted image part.

In summary, a rasql query returns a set fulfilling some search condition just as is the case with conventional SQL and OQL. The difference lies in the operations which are available in the select and where clause: SQL does not support expressions containing multidimensional operators, whereas rasql does.

Syntax

select resultList

from collName [ as collAlias ]

[ , collName [ as collAlias ] ]*

[ where booleanExp ]

The complete rasql query syntax can be found in the Appendix.

4.7.2. Select Clause: Result Preparation

Type and format of the query result are specified in the select part of the query. The query result type can be multidimensional, a struct or atomic (i.e., scalar), or a spatial domain / interval. The select clause can reference the collection iteration variable defined in the from clause; each array in the collection will be assigned to this iteration variable successively.

Example

Images from collection mr, with pixel intensity reduced by a factor 2:

select mr / 2

from mr

4.7.3. From Clause: Collection Specification

In the from clause, the list of collections to be inspected is specified, optionally together with a variable name which is associated to each collection. For query evaluation the cross product between all participating collections is built which means that every possible combination of elements from all collections is evaluated. For instance in case of two collections, each MDD of the first collection is combined with each MDD of the second collection. Hence, combining a collection with n elements with a collection containing m elements results in n*m combinations. This is important for estimating query response time.

Example

The following example subtracts each MDD of collection mr2 from each MDD of collection mr (the binary induced operation used in this example is explained in Binary Induction).

select mr - mr2

from mr, mr2

Using alias variables a and b bound to collections mr and mr2, resp., the same query looks as follows:

select a - b

from mr as a, mr2 as b

Cross products

As in SQL, multiple collections in a from clause such as

from c1, c2, ..., ck

are evaluated to a cross product. This means that the select clause is evaluated for a virtual collection that has n1 * n2 * … * nk elements if c1 contains n1 elements, c2 contains n2 elements, and so forth.

Warning: This holds regardless of the select expression - even if you mention only say c1 in the select clause, the number of result elements will be the product of all collection sizes!

4.7.4. Where Clause: Conditions

In the where clause, conditions are specified which members of the query result set must fulfil. Like in SQL, predicates are built as boolean expressions using comparison, parenthesis, functions, etc. Unlike SQL, however, rasql offers mechanisms to express selection criteria on multidimensional items.

Example

We want to restrict the previous result to those images where at least one difference pixel value is greater than 50 (see Binary Induction):

select mr - mr2

from mr, mr2

where some_cells( mr - mr2 > 50 )

4.8. Constants

4.8.1. Atomic Constants

Atomic constants are written in standard C/C++ style. If necessary constants are augmented with a one or two letter postfix to unambiguously determine its data type (Table 4.2).

The default for integer constants is l, and for floating-point it is d.

Specifiers are case insensitive.

Example

25c

-1700L

.4e-5D

Note

Boolean constants true and false are unique, so they do not need a type specifier.

postfix |

type |

|---|---|

o |

octet |

c |

char |

s |

short |

us |

unsigned short |

l |

long |

ul |

unsigned long |

f |

float |

d |

double |

4.8.1.1. Complex numbers

Special built-in types are CFloat32 and CFloat64 for single and double

precision complex numbers, resp, as well as CInt16 and CInt32 for

signed integer complex numbers.

Syntax

complex( re, im )

where re and im are integer or floating point expressions. The resulting constant type is summarized on the table below. Further re/im type combinations are not supported.

type of re |

type of im |

type of complex constant |

|---|---|---|

short |

short |

CInt16 |

long |

long |

CInt32 |

float |

float |

CFloat32 |

double |

double |

CFloat64 |

Example

complex( .35d, 16.0d ) -- CFloat64

complex( .35f, 16.0f ) -- CFloat32

complex( 5s, 16s ) -- CInt16

complex( 5, 16 ) -- CInt32

Component access

The complex parts can be extracted with .re and .im; more details

can be found in the Induced Operations section.

4.8.1.2. kB, MB, and GB

Bytes can be conveniently specified as kilobytes, megabytes, or gigabytes with

suffixes kB, MB, or GB. During parsing they are automatically

converted to bytes. This is relevant for example when specifying limits on data

access / download (see Trigger management).

Example

1.5kB = 1500

100MB = 100000

4GB = 4000000000

4.8.1.3. NaN and Inf

The following special floating-point constants are supported as well:

Constant |

Type |

|---|---|

NaN |

double |

NaNf |

float |

Inf |

double |

Inff |

float |

4.8.2. Composite Constants

Composite constants resemble records (“structs”) over atomic constants or other records. Notation is as follows.

Syntax

struct { const_0, ..., const_n }

where const_i must be of atomic or complex type, i.e. nested structs are not supported.

Example

struct{ 0c, 0c, 0c } -- black pixel in an RGB image, for example

struct{ 1l, true } -- mixed component types

Component access

See Struct Component Selection for details on how to extract the constituents from a composite value.

4.8.3. Array Constants

Small array constants can be indicated literally. An array constant consists of the spatial domain specification (see Spatial Domain) followed by the cell values whereby value sequencing is as follow. The array is linearized in a way that the lowest dimension 5 is the “outermost” dimension and the highest dimension 6 is the “innermost” one. Within each dimension, elements are listed sequentially, starting with the lower bound and proceeding until the upper bound. List elements for the innermost dimension are separated by comma “,”, all others by semicolon “;”.

The exact number of values as specified in the leading spatial domain expression must be provided. All constants must have the same type; this will be the result array’s base type.

Syntax

< mintervalExp

scalarList_0 ; ... ; scalarList_n ; >

where scalarList is defined as a comma separated list of literals:

scalar_0, scalar_1, ... scalar_n ;

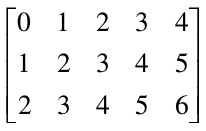

Example

< [-1:1,-2:2] 0, 1, 2, 3, 4;

1, 2, 3, 4, 5;

2, 3, 4, 5, 6 >

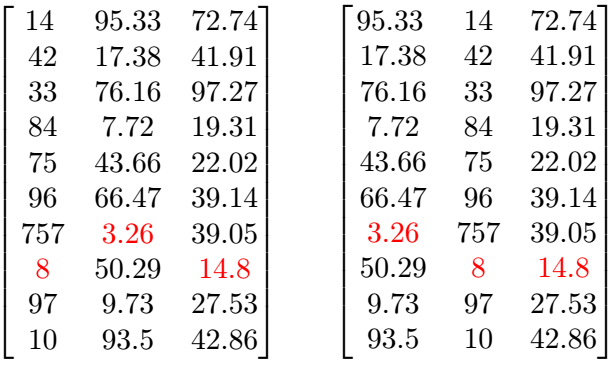

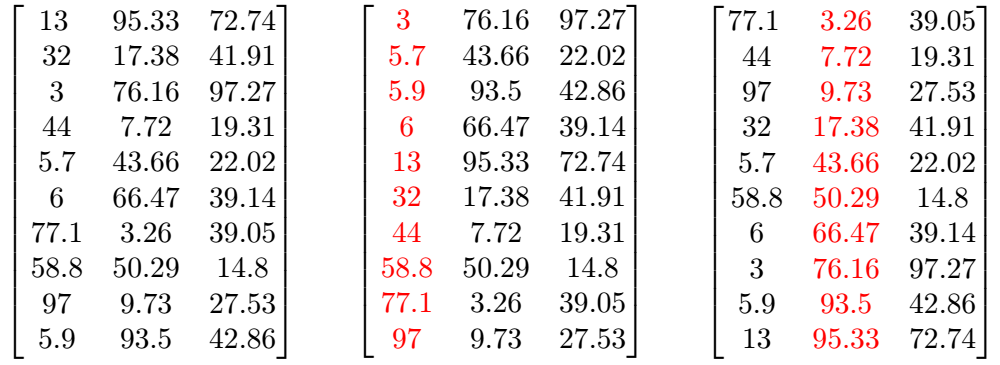

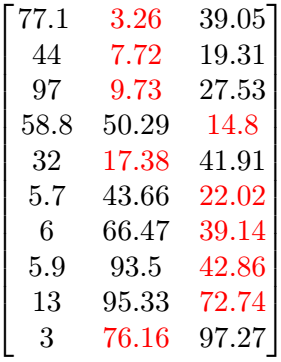

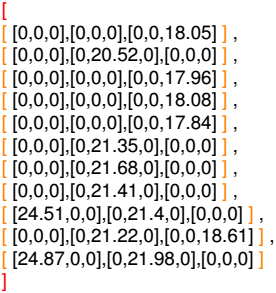

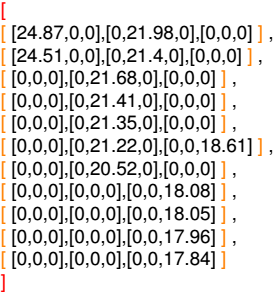

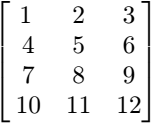

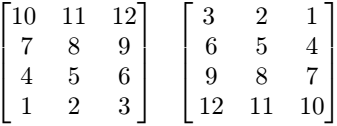

This constant expression defines the following matrix:

4.8.4. Object identifier (OID) Constants

OIDs serve to uniquely identify arrays (see Linking MDD with Other Data). Within a database, the OID of an array is an integer number. To use an OID outside the context of a particular database, it must be fully qualified with the system name where the database resides, the name of the database containing the array, and the local array OID.

The worldwide unique array identifiers, i.e., OIDs, consist of three components:

A string containing the system where the database resides (system name),

A string containing the database (“base name”), and

A number containing the local object id within the database.

The full OID is enclosed in ‘<’ and ‘>’ characters, the three name

components are separated by a vertical bar ‘|’.

System and database names obey the naming rules of the underlying operating system and base DBMS, i.e., usually they are made up of lower and upper case characters, underscores, and digits, with digits not as first character. Any additional white space (space, tab, or newline characters) inbetween is assumed to be part of the name, so this should be avoided.

The local OID is an integer number.

Syntax

< systemName | baseName | objectID >

objectID

where systemName and baseName are string literals and objectID is an integerExp.

Example

< acme.com | RASBASE | 42 >

42

4.8.5. String constants

A sequence of characters delimited by double quotes is a string.

Syntax

"..."

Example

SELECT encode(coll, "png") FROM coll

4.8.6. Collection Names

Collections are named containers for sets of MDD objects (see Linking MDD with Other Data). A collection name is made up of lower and upper case characters, underscores, and digits. Depending on the underlying base DBMS, names may be limited in length, and some systems (rare though) may not distinguish upper and lower case letters.

Operations available on name constants are string equality “=” and

inequality “!=”.

4.9. Spatial Domain Operations

4.9.1. One-Dimensional Intervals

One-dimensional (1D) intervals describe non-empty, consecutive sets of integer numbers, described by integer-valued lower and upper bound, resp.; negative values are admissible for both bounds. Intervals are specified by indicating lower and upper bound through integer-valued expressions according to the following syntax:

The lower and upper bounds of an interval can be extracted using the functions .lo and .hi.

Syntax

integerExp_1 : integerExp_2

intervalExp.lo

intervalExp.hi

A one-dimensional interval with integerExp_1 as lower bound and integerExp_2 as upper bound is constructed. The lower bound must be less or equal to the upper bound. Lower and upper bound extractors return the integer-valued bounds.

Examples

An interval ranging from -17 up to 245 is written as:

-17 : 245

Conversely, the following expression evaluates to 245; note the parenthesis to enforce the desired evaluation sequence:

(-17 : 245).hi

4.9.2. Multidimensional Intervals

Multidimensional intervals (m-intervals) describe areas in space, or better said: point sets. These point sets form rectangular and axis-parallel “cubes” of some dimension. An m-interval’s dimension is given by the number of 1D intervals it needs to be described; the bounds of the “cube” are indicated by the lower and upper bound of the respective 1D interval in each dimension.

From an m-interval, the intervals describing a particular dimension can

be extracted by indexing the m-interval with the number of the desired

dimension using the operator [].

Dimension counting in an m-interval expression runs from left to right, starting with lowest dimension number 0.

Syntax

[ intervalExp_0 , ... , intervalExp_n ]

[ intervalExp_0 , ... , intervalExp_n ] [integerExp ]

An (n+1)-dimensional m-interval with the specified intervalExp_i is built where the first dimension is described by intervalExp_0, etc., until the last dimension described by intervalExp_n.

Example

A 2-dimensional m-interval ranging from -17 to 245 in dimension 1 and from 42 to 227 in dimension 2 can be denoted as

[ -17 : 245, 42 : 227 ]

The expression below evaluates to [42:227].

[ -17 : 245, 42 : 227 ] [1]

...whereas here the result is 42:

[ -17 : 245, 42 : 227 ] [1].lo

4.10. Array Operations

As we have seen in the last Section, intervals and m-intervals describe n-dimensional regions in space.

Next, we are going to place information into the regular grid established by the m-intervals so that, at the position of every integer-valued coordinate, a value can be stored. Each such value container addressed by an n-dimensional coordinate will be referred to as a cell. The set of all the cells described by a particular m-interval and with cells over a particular base type, then, forms the array.

As before with intervals, we introduce means to describe arrays through expressions, i.e., to derive new arrays from existing ones. Such operations can change an arrays shape and dimension (sometimes called geometric operations), or the cell values (referred to as value-changing operations), or both. In extreme cases, both array dimension, size, and base type can change completely, for example in the case of a histogram computation.

First, we describe the means to query and manipulate an array’s spatial domain (so-called geometric operations), then we introduce the means to query and manipulate an array’s cell values (value-changing operations).

Note that some operations are restricted in the operand domains they accept, as is common in arithmetics in programming languages; division by zero is a common example. Arithmetic Errors and Other Exception Situations contains information about possible error conditions, how to deal with them, and how to prevent them.

4.10.1. Spatial Domain

The m-interval covered by an array is called the array’s spatial domain. Function sdom() allows to retrieve an array’s current spatial domain. The current domain of an array is the minimal axis-parallel bounding box containing all currently defined cells.

As arrays can have variable bounds according to their type definition (see Array types), their spatial domain cannot always be determined from the schema information, but must be recorded individually by the database system. In case of a fixed-size array, this will coincide with the schema information, in case of a variable-size array it delivers the spatial domain to which the array has been set. The operators presented below and in Update allow to change an array’s spatial domain. Notably, a collection defined over variable-size arrays can hold arrays which, at a given moment in time, may differ in the lower and/or upper bounds of their variable dimensions.

Syntax

sdom( mddExp )

Function sdom() evaluates to the current spatial domain of mddExp.

Examples

Consider an image a of collection mr. Elements from this collection are defined as having free bounds, but in practice our collection elements all have spatial domain [0 : 255, 0 : 210]. Then, the following equivalences hold:

sdom(a) = [0 : 255, 0 : 210]

sdom(a)[0] = [0 : 255]

sdom(a)[0].lo = 0

sdom(a)[0].hi = 255

4.10.2. Geometric Operations

4.10.2.1. Trimming

Reducing the spatial domain of an array while leaving the cell values unchanged is called trimming. Array dimension remains unchanged. Attempting to extend or intersect the array’s spatial domain will lead to an error; use the extend function in this case.

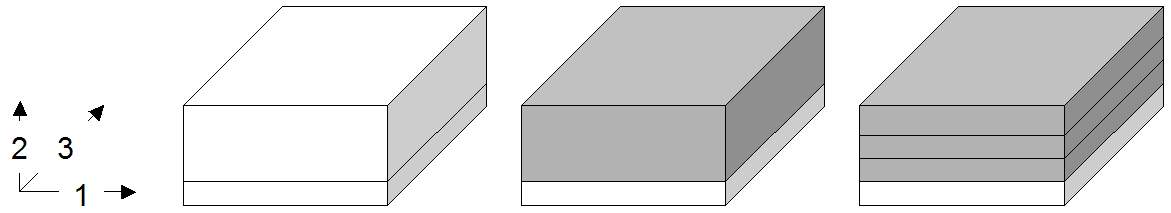

Figure 4.6 Spatial domain modification through trimming (2-D example)

Syntax

mddExp [ mintervalExp ]

Examples

The following query returns cutouts from the area [120: 160 , 55 : 75]

of all images in collection mr (see Figure 4.7).

select mr[ 120:160, 55:75 ]

from mr

Figure 4.7 Trimming result

4.10.2.2. Section

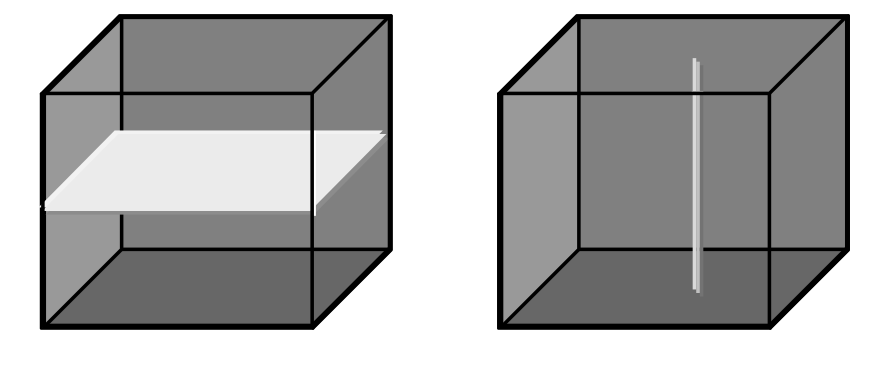

A section allows to extract lower-dimensional layers (“slices”) from an array.

Figure 4.8 Single and double section through 3-D array, yielding 2-D and 1-D sections.

A section is accomplished through a trim expression by indicating the slicing position rather than a selection interval. A section can be made in any dimension within a trim expression. Each section reduces the dimension by one.

Like with trimming, a section must be within the spatial domain of the array, otherwise an error indicating that the subset domain extends outside of the array spatial domain will be thrown.

Syntax

mddExp [ integerExp_0 , ... , integerExp_n ]

This makes sections through mddExp at positions integerExp_i for each dimension i.

Example

The following query produces a 2-D section in the 2nd dimension of a 3-D cube:

select Images3D[ 0:256, 10, 0:256 ]

from Images3D

Note

If a section is done in every dimension of an array, the result is one single cell. This special case resembles array element access in programming languages, e.g., C/C++. However, in rasql the result still is an array, namely one with zero dimensions and exactly one element.

Example

The following query delivers a set of 0-D arrays containing single pixels, namely the ones with coordinate [100,150]:

select mr[ 100, 150 ]

from mr

4.10.2.3. Trim Wildcard Operator “*”

An asterisk “*” can be used as a shorthand for an sdom() invocation in a trim expression; the following phrases all are equivalent:

a [ *:*, *:* ] = a [ sdom(a)[0] , sdom(a)[1] ]

= a [ sdom(a)[0].lo : sdom(a)[0].hi ,

sdom(a)[1].lo : sdom(a)[1].hi ]

An asterisk “*” can appear at any lower or upper bound position within a trim expression denoting the current spatial domain boundary. A trim expression can contain an arbitrary number of such wildcards. Note, however, that an asterisk cannot be used for specifying a section.

Example

The following are valid applications of the asterisk operator:

select mr[ 50:*, *:200 ]

from mr

select mr[ *:*, 10:150 ]

from mr

The next is illegal because it attempts to use an asterisk in a section:

select mr[ *, 100:200 ] -- illegal "*" usage in dimension 0

from mr

Note

It is well possible (and often recommended) to use an array’s spatial domain or part of it for query formulation; this makes the query more general and, hence, allows to establish query libraries. The following query cuts away the rightmost pixel line from the images:

select mr[ *:*, *:sdom(mr)[1].hi - 1 ] -- good, portable

from mr

In the next example, conversely, trim bounds are written explicitly; this query’s trim expression, therefore, cannot be used with any other array type.

select mr[ 0:767, 0:1023 ] -- bad, not portable

from mr

One might get the idea that the last query evaluates faster. This, however, is not the case; the server’s intelligent query engine makes the first version execute at just the same speed.

4.10.2.4. Positionally-independent Subsetting

Rasdaman supports positionally-independent subsetting like in WCPS and SQL/MDA, where for each trim/slice the axis name is indicated as well, e.g.

select mr2[d0(0:100), d1(50)] from mr2

The axis names give a reference to the addressed axes, so the order doesn’t matter anymore. This is equivalent:

select mr2[d1(50), d0(0:100)] from mr2

Furthermore, not all axes have to be specified. Any axes which are not specified default to “:”. For example:

select mr2[d1(50)] from mr2

=

select mr2[d0(*:*), d1(50)] from mr2

The two subset formats cannot be mixed, e.g. this is an error:

select mr2[d0(0:100), 50] from mr2

4.10.2.5. Shifting a Spatial Domain

Rasdaman provides built-in functions to transpose an array: its spatial domain remains unchanged in shape, but all cell contents simultaneously are moved to another location in n-dimensional space. Cell values themselves remain unchanged.

Syntax

shift( mddExp , pointExp ) (1a)

shift_by( mddExp , pointExp ) (1b)

shift_to( mddExp , pointExp ) (2)

The shift functions accept an mddExp and a pointExp and return an array whose spatial domain is shifted either

by the vector given by the pointExp in variants (1a) and (1b), or

to a new origin given by the pointExp in variant (2)

The dimension of pointExp must be equal to the dimension of the mddExp.

Examples

The following expression evaluates to an array with spatial domain

[3:13, 4:24] containing the same values as the original array a.

shift( a[ 0:10, 0:20 ], [ 3, 4 ] )

shift_by( a[ 0:10, 0:20 ], [ 3, 4 ] )

The following example shifts the array operand’s spatial domain

[ 5:15, 100:120 ] to a domain at origin [ 0, 0 ] (i.e.

[ 0:10, 0:20 ])

shift_to( a[ 5:15, 100:120 ], [ 0, 0 ] )

4.10.2.6. Extending a Spatial Domain

Function extend() enlarges a given MDD with the domain specified. The domain for extending must, for every boundary element, be at least as large as the MDD’s domain boundary. The new MDD contains 0 values in the extended part of its domain and the MDD’s original cell values within the MDD’s domain.

Syntax

extend( mddExp , mintervalExp )

The function accepts an mddExp and a mintervalExp and returns an array whose spatial domain is extended to the new domain specified by mintervalExp. The result MDD has the same cell type as the input MDD.

Precondition:

sdom( mddExp ) contained in mintervalExp

Example

Assuming that MDD a has a spatial domain of [0:50, 0:25], the following

expression evaluates to an array with spatial domain [-100:100, -50:50],

a‘s values in the subdomain [0:50, 0:25], and 0 values at the

remaining cell positions.

extend( a, [-100:100, -50:50] )

4.10.2.7. Geographic projection

4.10.2.7.1. Overview

“A map projection is any method of representing the surface of a sphere or other three-dimensional beginEndBody on a plane. Map projections are necessary for creating maps. All map projections distort the surface in some fashion. Depending on the purpose of the map, some distortions are acceptable and others are not; therefore different map projections exist in order to preserve some properties of the sphere-like beginEndBody at the expense of other properties.” (Wikipedia)

Each coordinate tieing a geographic object, map, or pixel to some position on earth (or some other celestial object, for that matter) is valid only in conjunction with the Coordinate Reference System (CRS) in which it is expressed. For 2-D Earth CRSs, a set of CRSs and their identifiers is normatively defined by the OGP Geomatics Committee, formed in 2005 by the absorption into OGP of the now-defunct European Petroleum Survey Group (EPSG). By way of tradition, however, this set of CRS definitions still is known as “EPSG”, and the CRS identifiers as “EPSG codes”. For example, EPSG:4326 references the well-known WGS84 CRS.

4.10.2.7.2. The project() function

Assume an MDD object M and two CRS identifiers C1 and C2 such as

“EPSG:4326”. The project() function establishes an output MDD, with same

dimension as M, whose contents is given by projecting M from CRS C1

into CRS C2.

The project() function comes in several variants based on the provided

input arguments

(1) project( mddExpr, boundsIn, crsIn, crsOut )

(2) project( mddExpr, boundsIn, crsIn, crsOut, resampleAlg )

(3) project( mddExpr, boundsIn, crsIn, boundsOut, crsOut,

widthOut, heightOut )

(4) project( mddExpr, boundsIn, crsIn, boundsOut, crsOut,

widthOut, heightOut, resampleAlg, errThreshold )

(5) project( mddExpr, boundsIn, crsIn, boundsOut, crsOut,

xres, yres)

(6) project( mddExpr, boundsIn, crsIn, boundsOut, crsOut,

xres, yres, resampleAlg, errThreshold )

where

mddExpr- MDD object to be reprojected.boundsIn- geographic bounding box given as a string of comma-separated floating-point values of the format:"xmin, ymin, xmax, ymax".crsIn- geographic CRS as a string. Internally, theproject()function is mapped to GDAL; hence, it accepts the same CRS formats as GDAL:Well Known Text (as per GDAL)

“EPSG:n”

“EPSGA:n”

“AUTO:proj_id,unit_id,lon0,lat0” indicating OGC WMS auto projections

“

urn:ogc:def:crs:EPSG::n” indicating OGC URNs (deprecated by OGC)PROJ.4 definitions

well known names, such as NAD27, NAD83, WGS84 or WGS72.

WKT in ESRI format, prefixed with “ESRI::”

“IGNF:xxx” and “+init=IGNF:xxx”, etc.

Since recently (v1.10), GDAL also supports OGC CRS URLs, OGC’s preferred way of identifying CRSs.

boundsOut- geographic bounding box of the projected output, given in the same format asboundsIn. This can be “smaller” than the input bounding box, in which case the input will be cropped.crsOut- geographic CRS of the result, in same format ascrsIn.widthOut,heightOut- integer grid extents of the result; the result will be accordingly scaled to fit in these extents.xres,yres- axis resolution in target georeferenced units.

resampleAlg- resampling algorithm to use, equivalent to the ones in GDAL:- near

Nearest neighbour (default, fastest algorithm, worst interpolation quality).

- bilinear

Bilinear resampling (2x2 kernel).

- cubic

Cubic convolution approximation (4x4 kernel).

- cubicspline

Cubic B-spline approximation (4x4 kernel).

- lanczos

Lanczos windowed sinc (6x6 kernel).

- average

Average of all non-NODATA contributing pixels. (GDAL >= 1.10.0)

- mode

Selects the value which appears most often of all the sampled points. (GDAL >= 1.10.0)

- max

Selects the maximum value from all non-NODATA contributing pixels. (GDAL >= 2.0.0)

- min

Selects the minimum value from all non-NODATA contributing pixels. (GDAL >= 2.0.0)

- med

Selects the median value of all non-NODATA contributing pixels. (GDAL >= 2.0.0)

- q1

Selects the first quartile value of all non-NODATA contributing pixels. (GDAL >= 2.0.0)

- q3

Selects the third quartile value of all non-NODATA contributing pixels. (GDAL >= 2.0.0)

errThreshold- error threshold for transformation approximation (in pixel units - defaults to 0.125).

Example

The following expression projects the MDD worldMap with bounding box

“-180, -90, 180, 90” in CRS EPSG 4326, into EPSG 54030:

project( worldMap, "-180, -90, 180, 90", "EPSG:4326", "EPSG:54030" )

The next example reprojects a subset of MDD Formosat with geographic

bbox “265725, 2544015, 341595, 2617695” in EPSG 32651, to bbox

“120.630936455 23.5842129067 120.77553782 23.721772322” in EPSG 4326 fit into

a 256 x 256 pixels area. The resampling algorithm is set to bicubic, and the

pixel error threshold is 0.1.

project( Formosat[ 0:2528, 0:2456 ],

"265725, 2544015, 341595, 2617695", "EPSG:32651",

"120.630936455 23.5842129067 120.77553782 23.721772322", "EPSG:4326",

256, 256, cubic, 0.1 )

Limitations

Only 2-D arrays are supported. For multiband arrays, all bands must be of the same cell type.

4.10.2.7.3. Notes

Reprojection implies resampling of the cell values into a new grid, hence usually they will change.

As for the resampling process typically a larger area is required than the reprojected data area itself, it is advisable to project an area smaller than the total domain of the MDD.

Per se, rasdaman is a domain-agnostic Array DBMS and, hence, does not

know about CRSs; specific geo semantics is added by rasdaman’s petascope

layer. However, for the sake of performance, the

reprojection capability – which in geo service practice is immensely important

– is pushed down into rasdaman, rather than doing reprojection in

petascope’s Java code. To this end, the project() function provides rasdaman

with enough information to perform a reprojection, however, without

“knowing” anything in particular about geographic coordinates and CRSs.

One consequence is that there is no check whether this lat/long project is

applied to the proper axis of an array; it is up to the application (usually:

petascope) to handle axis semantics.

One consequence is that there is no check whether this lat/long project is applied to the proper axis of an array; it is up to the application (usually: petascope) to handle axis semantics.

4.10.3. Clipping Operations

Clipping is a general operation covering polygon clipping, linestring

selection, polytope clipping, curtain queries, and corridor queries. Presently,

all operations are available in rasdaman via the clip function.

Further examples of clipping can be found in the systemtest for clipping.

4.10.3.1. Polygons

4.10.3.1.1. Syntax

select clip( c, polygon(( list of WKT points )) )

from coll as c

The input consists of an MDD expression and a list of WKT points, which determines the set of vertices of the polygon. Polygons are assumed to be closed with positive area, so the first vertex need not be repeated at the end, but there is no problem if it is. The algorithms used support polygons with self-intersection and vertex re-visitation.

Polygons may have interiors defined, such as

polygon( ( 0 0, 9 0, 9 9, 0 9, 0 0),

( 3 3, 7 3, 7 7, 3 7, 3 3 ) )

which would describe the annular region of the box [0:9,0:9] with the

interior box [3:7,3:7] removed. In this case, the interior polygons (there

may be many, as it forms a list) must not intersect the exterior polygon.

The clip bounding box must intersect the spatial domain of the MDD. The clipped areas that extend outside of the MDD will be set to null values.

4.10.3.2. Multipolygons

4.10.3.2.1. Syntax

select clip( c, multipolygon((( list of WKT points )),(( list of WKT points ))*) )

from coll as c

The input consists of an MDD expression and a list of polygons defined by list of WKT points. The assumptions about polygons are same as the ones for Polygon.

4.10.3.2.2. Return type

The output of a polygon query is a new array with dimensions corresponding to the bounding box of the polygon vertices. In case of Multipolygon, the new array have dimensions corresponding to closure of bounding boxes of every individual polygon, which domain intersects the collection’s spatial domain. The data in the array consists of null values where cells lie outside the polygon or MDD (or 0 values if no null values are associated with the array) and otherwise consists of the data in the collection where the corresponding cells lie inside the polygon. This could change the null values stored outside the polygon from one null value to another null value, in case a range of null values is used. By default, the first available null value will be utilized for the complement of the polygon.

An illustrative example of a polygon clipping is the right triangle with

vertices located at (0,0,0), (0,10,0) and (0,10,10), which can be

selected via the following query:

select clip( c, polygon((0 0 0, 0 10 0, 0 10 10)) )

from coll as c

4.10.3.2.3. Oblique polygons with subspacing

In case all the points in a polygon are coplanar, in some MDD object d of

higher dimension than 2, users can first perform a subspace operation on d

which selects the 2-D oblique subspace of d containing the polygon. For

example, if the polygon is the triangle polygon((0 0 0, 1 1 1, 0 1 1, 0 0 0)),

this triangle can be selected via the following query:

select clip( subspace(d, (0 0 0, 1 1 1, 0 1 1) ),

polygon(( 0 0, 1 1 , 0 1 , 0 0)) )

from coll as d

where the result of subspace(d) is used as the domain of the polygon. For

more information look in Subspace Queries.

4.10.3.3. Linestrings

4.10.3.3.1. Syntax

select clip( c, linestring( list of WKT points ) ) [ with coordinates ]

from coll as c

The input parameter c refers to an MDD expression of dimension equal to the

dimension of the points in the list of WKT points. The list of WKT points

consists of parameters such as linestring(0 0, 19 -3, 19 -21), which would

describe the 3 endpoints of 2 line segments sharing an endpoint at 19 -3, in

this case.

4.10.3.3.2. Return type

The output consists of a 1-D MDD object consisting of the points selected along

the path drawn out by the linestring. The points are selected using a Bresenham

Line Drawing algorithm which passes through the spatial domain in the MDD

expression c, and selects values from the stored object. In case the

linestring spends some time outside the spatial domain of c, the first

null value will be used to fill the result of the linestring, just as in polygon

clipping.

When with coordinates is specified, in addition to the original cell values

the coordinate values are also added to the result MDD. The result cell type for

clipped MDD of dimension N will be composite of the following form:

If the original cell type

elemtypeis non-composite:{ long d1, ..., long dN, elemtype value }

Otherwise, if the original cell type is composite of

Mbands:{ long d1, ..., long dN, elemtype1 elemname1, ..., elemetypeM elemnameM }

4.10.3.3.3. Example

Select a Linestring from rgb data with coordinates. First two values of each

cell in the result are the x/y coordinates, with following values (three in this

case for RGB data) are the cell values of the clip operation to which

with coordinates is applied.

select encode(

clip( c, linestring(0 19, 19 24, 12 17) ) with coordinates, "json")

from rgb as c

Result:

["0 19 119 208 248","1 19 119 208 248","2 20 119 208 248", ...]

The same query without specifying with coordinates:

select encode(

clip( c, linestring(0 19, 19 24, 12 17) ), "json")

from rgb as c

results in

["119 208 248","119 208 248","119 208 248", ...]

4.10.3.4. Curtains

4.10.3.4.1. Syntax

select clip( c, curtain( projection(dimension pair),

polygon(( ... )) ) )

from coll as c

and

select clip( c, curtain( projection(dimension list),

linestring( ... ) ) )

from coll as c

The input in both variants consists of a dimension list corresponding to the

dimensions in which the geometric object, either the polygon or the linestring,

is defined. The geometry object is defined as per the above descriptions;

however, the following caveat applies: the spatial domain of the mdd expression

is projected along the projection dimensions in the projection(dimension

list). For a polygon clipping, which is 2-D, the dimension list is a pair of

values such as projection(0, 2) which would define a polygon in the axial

dimensions of 0 and 2 of the MDD expression c. For instance, if the spatial

domain of c is [0:99,0:199,0:255], then this would mean the domain upon

which the polygon is defined would be [0:99,0:255].

4.10.3.4.2. Return type

The output consists of a polygon clipping at every slice of the spatial domain

of c. For instance, if the projection dimensions of (0, 2) are used for

the same spatial domain of c above, then a polygon clipping is performed at

every slice of c of the form [0:99,x,0:255] and appended to the result

MDD object, where there is a slice for each value of x in [0:199].

4.10.3.5. Corridors

4.10.3.5.1. Syntax

select clip( c, corridor( projection(dimension pair),

linestring( ... ),

polygon(( ... )) ) )

from coll as c

and

select clip( c, corridor( projection(dimension pair),

linestring( ... ),

polygon(( ... )),

discrete ) )

from coll as c

The input consists of a dimension list corresponding to the dimensions in which the geometric object, in this case a polygon, is defined. The linestring specifies the path along which this geometric object is integrated. One slice is sampled at every point, and at least the first point of the linestring should be contained within the polygon to ensure a meaningful result (an error is thrown in case it is not). There is an optional discrete flag which modifies the output by skipping the extrapolation of the linestring data to interior points.

4.10.3.5.2. Return type

The output consists of a polygon clipping at every slice of the spatial domain

of c translated along the points in the linestring, where the first axis of

the result is indexed by the linestring points and the latter axes are indexed

by the mask dimensions (in this case, the convex hull of the polygon). The

projection dimensions are otherwise handled as in curtains; it is the spatial

offsets given by the linestring coordinates which impact the changes in the

result. In the case where the discrete parameter was utilized, the output is

indexed by the number of points in the linestring description in the query and

not by the extrapolated linestring, which uses a Bresenham algorithm to find

the grid points in between.

4.10.3.6. Subspace Queries

Here we cover the details of subspace queries in rasdaman. Much like slicing via a query such as

select c[0:9,1,0:9] from collection as c

the subspace query parameter allows users to extract a lower-dimensional dataset from an existing collection. It is capable of everything that a slicing query is capable of, and more. The limitation of slicing is that the selected data must lie either parallel or perpendicular to existing axes; however, with subspacing, users can arbitrarily rotate the axes of interest to select data in an oblique fashion. This control is exercised by defining an affine subspace from a list of vertices lying in the datacube. Rasdaman takes these points and finds the unique lowest-dimensional affine subspace containing them, and outputs the data closest to this slice, contained in the bounding box of the given points, into the resulting array.

Structure of the query:

select clip( c, subspace(list of WKT points) )

from coll as c

We can illustrate the usage with an example of two queries which are identical in output:

select clip( c, subspace(0 0 0, 1 0 0, 0 0 1) ) from coll as c

select c[0:1,0,0:1] from coll as c

This example will result in 1D array of sdom [0:99]:

select clip( c, subspace(19 0, 0 99) ) from test_rgb as c

This example will result in a a 2D array of sdom [0:7,0:19]:

select clip( c, subspace(0 0 0, 0 19 0, 7 0 7) )

from test_grey3d as c

and it will consist of the best integer lattice points reachable by the vectors

(1,0,1) and (0,1,0) within the bounding box domain of [0:7,0:19,0:7]

in test_grey3d.

Generally speaking, rasdaman uses the 1st point as a basepoint for an affine

subspace containing all given points, constructs a system of equations to

determine whether or not a point is in that subspace or not, and then searches

the bounding box of the given points for solutions to the projection operator

which maps [0:7,0:19,0:7] to [0:7,0:19]. The result dimensions are

chosen such that each search yields a unique real solution, and then rasdaman

rounds to the nearest integer cell before adding the value stored in that cell

to the result object.

Some mathematical edge cases:

Because of arithmetic on affine subspaces, the following two queries are fundamentally identical to rasdaman:

select clip( c, subspace(0 0 0, 1 1 0, 0 1 0) )

from test_grey3d as c

select clip( c, subspace(0 0 0, 1 0 0, 0 1 0) )

from test_grey3d as c

Rasdaman’s convention is to use the first point as the translation point, and constructs the vectors generating the subspace from the differences. There is no particular reason not to use another point in the WKT list; however, knowing this, users should be aware that affine subspaces differ slightly from vector subspaces in that the following two queries differ:

select clip( c, subspace(10 10 10, 0 0 10, 10 0 10) )

from test_grey3d as c

select clip( c, subspace(0 0 0, 10 10 0, 0 10 0) )

from test_grey3d as c

The two queries have the same result domains of [0:10,0:10], and the projection

for both lie on the first 2 coordinate axes since the 3rd coordinate remains

constant; however, the data selections differ because the subspaces generated by

these differ, even though the generating vectors of (1 1 0) and (0 1 0)

are the same.

Even though the bounding box where one searches for solutions is the same

between these two queries, there is no way to reach the origin with the vectors

(1 1 0) and (0 1 0) starting at the base point of (10 10 10) because

neither vector can impact the 3rd coordinate value of 10; similarly, starting at

(0 0 0) must leave the third coordinate fixed at 0. There is nothing special

about choosing the first coordinate as our base point – the numbers might

change, but the resulting data selections in both queries would remain constant.

The following two queries generate the same subspace, but the latter has a larger output domain:

select clip( c, subspace(0 0 0, 1 1 0, 0 1 0) )

from test_grey3d as c

select clip( c, subspace(0 0 0, 1 1 0, 0 1 0, 0 0 0, 1 2 0) )

from test_grey3d as c

As much redundancy as possible is annihilated during a preprocessing stage which uses a Gram-Schmidt procedure to excise extraneous data imported during query time, and with this algorithm, rasdaman is able to determine the correct dimension of the output domain.

Some algorithmic caveats:

The complexity of searching for a solution for each result cell is related to

the codimension of the affine subspace, and not the dimension of the affine

subspace itself. In fact, if k is the difference between the dimension of the

collection array and the dimension of the result array, then each cell is

determined in O(k^2) time. Preprocessing happens once for the entire query,

and occurs in O(k^3) time. There is one exception to the codimensionality

considerations: a 1-D affine subspace (also known as a line segment) is selected

using a multidimensional generalization of the Bresenham Line Algorithm, and so

the results are determined in O(n) time, where n is the dimension of the

collection.

Tip: If you want a slice which is parallel to axes, then you are better off using the classic slicing style of:

select c[0:19,0:7,0] from collection as c

as the memory offset computations are performed much more efficiently.

4.10.4. Induced Operations

Induced operations allow to simultaneously apply a function originally working on a single cell value to all cells of an MDD. The result MDD has the same spatial domain, but can change its base type.

Examples

img.green + 5 c

This expression selects component named “green” from an RGB image and adds 5 (of type char, i.e., 8 bit) to every pixel.

img1 + img2

This performs pixelwise addition of two images (which must be of equal spatial domain).

Induction and structs

Whenever induced operations are applied to a composite cell structure (“structs” in C/C++), then the induced operation is executed on every structure component. If some cell structure component turns out to be of an incompatible type, then the operation as a whole aborts with an error.

For example, a constant can be added simultaneously to all components of an RGB image:

select rgb + 5

from rgb

Induction and complex

Complex numbers, which actually form a composite type supported as a base type, can be accessed with the record component names re and im for the real and the imaginary part, resp.

Example

The first expression below extracts the real component, the second one the imaginary part from a complex number c:

c.re

c.im

4.10.4.1. Unary Induction

Unary induction means that only one array operand is involved in the expression. Two situations can occur: Either the operation is unary by nature (such as boolean not); then, this operation is applied to each array cell. Or the induce operation combines a single value (scalar) with the array; then, the contents of each cell is combined with the scalar value.

A special case, syntactically, is the struct/complex component selection (see next subsection).

In any case, sequence of iteration through the array for cell inspection is chosen by the database server (which heavily uses reordering for query optimisation) and not known to the user.

Syntax

unaryOp mddExp

mddExp binaryOp scalarExp

scalarExp binaryOp mddExp

Example

The red images of collection rgb with all pixel values multiplied by 2:

select rgb.red * 2c

from rgb

Note that the constant is marked as being of type char so that the result type

is minimized (short). Omitting the “c” would lead to an addition of long

integer and char, resulting in long integer with 32 bit per pixel. Although

pixel values obviously are the same in both cases, the second alternative

requires twice the memory space. For more details visit the

Type Coercion Rules section.

4.10.4.2. Struct Component Selection

Component selection from a composite value is done with the dot operator well-known from programming languages. The argument can either be a number (starting with 0) or the struct element name. Both statements of the following example would select the green plane of the sample RGB image.

This is a special case of a unary induced operator.

Syntax

mddExp.attrName

mddExp.intExp

Examples

select rgb.green

from rgb

select rgb.1

from rgb

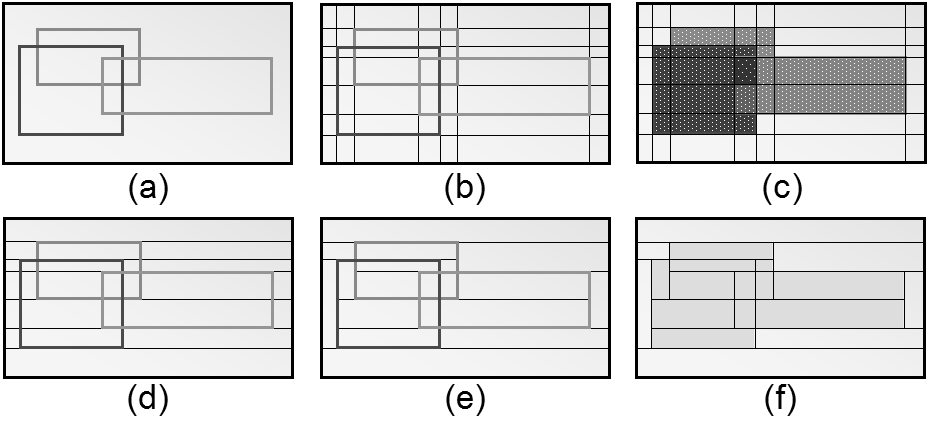

Figure 4.9 RGB image and green component

Note

Aside of operations involving base types such as integer and boolean, combination of complex base types (structs) with scalar values are supported. In this case, the operation is applied to each element of the structure in turn.

Examples

The following expression reduces contrast of a color image in its red, green, and blue channel simultaneously:

select rgb / 2c

from rgb

An advanced example is to use image properties for masking areas in this image. In the query below, this is done by searching pixels which are “sufficiently green” by imposing a lower bound on the green intensity and upper bounds on the red and blue intensity. The resulting boolean matrix is multiplied with the original image (i.e., componentwise with the red, green, and blue pixel component); the final image, then, shows the original pixel value where green prevails and is {0,0,0} (i.e., black) otherwise (Figure 4.10)

select rgb * ( (rgb.green > 130c) and

(rgb.red < 110c) and

(rgb.blue < 140c) )

from rgb

Figure 4.10 Suppressing “non-green” areas

Note

This mixing of boolean and integer is possible because the usual C/C++ interpretation of true as 1 and false as 0 is supported by rasql.

4.10.4.3. Binary Induction

Binary induction means that two arrays are combined.

Syntax

mddExp binaryOp mddExp

Example

The difference between the images in the mr collection and the image in

the mr2 collection:

select mr - mr2

from mr, mr2

Note

Two cases have to be distinguished:

Both left hand array expression and right hand array expression operate on the same array, for example:

select rgb.red - rgb.green from rgb

In this case, the expression is evaluated by combining, for each coordinate position, the respective cell values from the left hand and right hand side.

Left hand array expression and right hand array expression operate on different arrays, for example:

select mr - mr2 from mr, mr2

This situation specifies a cross product between the two collections involved. During evaluation, each array from the first collection is combined with each member of the second collection. Every such pair of arrays then is processed as described above.

Obviously the second case can become computationally very expensive, depending on the size of the collections involved - if the two collections contain n and m members, resp., then n*m combinations have to be evaluated.

4.10.4.4. Case statement

The rasdaman case statement serves to model n-fold case distinctions based on the SQL92 CASE statement which essentially represents a list of IF-THEN statements evaluated sequentially until either a condition fires and delivers the corresponding result or the (mandatory) ELSE alternative is returned.

In the simplest form, the case statement looks at a variable and compares it to different alternatives for finding out what to deliver. The more involved version allows general predicates in the condition.

This functionality is implemented in rasdaman on both scalars (where it resembles SQL) and on MDD objects (where it establishes an induced operation). Due to the construction of the rasql syntax, the distinction between scalar and induced operations is not reflected explicitly in the syntax, making query writing simpler.

Syntax

Variable-based variant:

case generalExp when scalarExp then generalExp [ when scalarExp then generalExp ]* else generalExp end

All generalExps must be of a compatible type.

Expression-based variant: